One of the more fascinating aspects of artificial intelligence is when the artificially intelligent models that we build appear to do things creatively. Obviously, the models are not actually “creative” in the human sense of the word, but their sophistication can lead to decisions that are not obvious to humans and, thus, give one the sense that they are creative.

Although there are a number of great examples of the apparent creativity of AI bots out there, many of which I plan to cover in future posts, I thought it best to start with a tip of the hat to the latest and greatest in general-purpose AI, GPT-3.

GPT-3 is the newest language model created by OpenAI, an AI research company whose mission is to build generalized AI solutions that benefit all of humanity (openai.com). But before we jump into GPT and how it can give the appearance of being a creative agent, let’s first explain what is meant by “general” AI.

From Task-Specific, to General-Purpose, to Generalized AI, to the Holy Grail of Data Science

As data science and associated artificial intelligence technologies have matured over the last few decades, an ongoing conversation in the field has pointed out that even the most sophisticated models are really only good at solving very specific problems. That is, our models are only as good as the problems (or data) they were specifically trained on. We are not building models that can reason like a human and apply what they learned in one context to a new context they haven’t encountered before. Rather, our models are problem-specific in that they rely on very specific data that have very specific outcomes associated with them. In this way, artificial intelligence, today at least, is not generalized.

Despite this reality today, AI researchers and science fiction writers alike have long recognized the value of being able to train generalized AI. The basic idea is that an AI agent trained with skills, rather than trained to solve specific problems, would be able to apply those skills across multiple problem spaces, ultimately saving us all a substantial amount of time and money. But I digress…

GPT-3 as General-Purpose AI

Although no one has yet built a truly generalized AI that has awareness and the ability to complain about crappy jobs, the folks at OpenAI have made significant strides in that direction. Based on their GPT-2 model architecture, GPT-3 was developed to be the largest language model publicly available and fits within a class of models commonly referred to as “general-purpose” models. General-purpose models are not able to reason, per se, but instead leverage their model of a very large knowledge base (like the entire internet) to give the appearance of understanding a variety of different language-based tasks.

How big is GPT-3? Compared to its predecessor, GPT-2, GPT-3 is much larger, expanding the model from 1.5 billion parameters to around 175 billion trained parameters. It is estimated that it cost nearly $12 million to train GPT-3.

So, what can it do? Being a very large general-purpose language model that used the internet as its knowledge base (up to 2019), GPT-3 is capable of a wide variety of tasks and can be quickly taught new language tasks with very little direction. For example, one developer built a GPT-3 application that helps people come up with new startup business ideas like “Help people draw up plans for their dream home and then help them find contractors to build it” (weirdly specific, and oddly relevant). Another developer used GPT-3 to create a recipe generator application (https://twitter.com/notsleepingturk/status/1286112191083696128). Okay, one more quick example. A developer set up GPT-3 to generate comedic conversations with real comedy personalities (e.g. Jerry Seinfeld & Eddie Murphy) and took note of how the bot was able to follow well-known rules of comedy. It is this broad capability that allows GPT-3 to really appear creative in several contexts.

But rather than talk about all the wonderful and very creative tasks that GPT-3 has been applied to, I thought it best to implement our own creative GPT-3 use case. About a month ago, I was granted access to the GPT-3 API to research different problem spaces that the model could be applied to. After some consideration and quick brainstorming, I identified a use case to test: teaching GPT-3 to generate funny headlines for blogs.

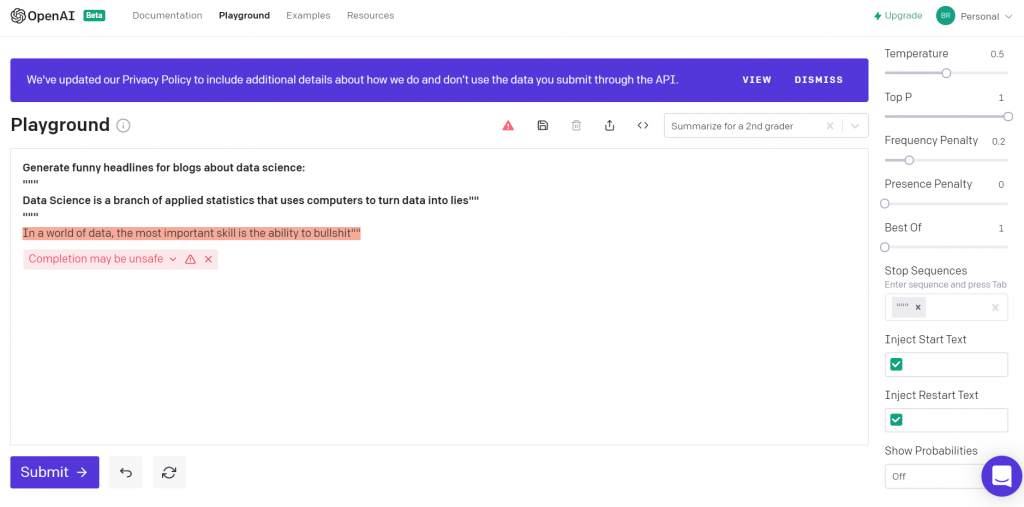

The key to using GPT is to ensure you are properly setting up the context that you want the model to solve. OpenAI provides a Playgound application for developers to experiment with different setups that nudge the bot in a particular direction. What is remarkable about this model is that it takes relatively little direction in order to generate quality responses right out of the gate. For example, just providing the prompt “Generate funny headlines for blogs about data science:” and nothing else, let to the following two results:

Two points of interest in this very simple test: (1) the two generated headlines are spot on, and (2) the application alerts us to potentially “unsafe” content.
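For readers who would rather script this than click around the Playground, here is a minimal sketch of the same zero-shot setup. Only the prompt text comes from the test above. The function names, the `complete` callable, and the `fake_complete` stand-in are all hypothetical and do not represent OpenAI's API; swap in whatever completion call your own access provides.

```python
from typing import Callable, List


def zero_shot_prompt(topic: str) -> str:
    """Return the bare instruction from the post, with no examples attached."""
    return f"Generate funny headlines for blogs about {topic}:"


def sample_headlines(complete: Callable[[str], str], topic: str, n: int = 2) -> List[str]:
    """Send the same prompt n times and collect the cleaned-up completions.

    `complete` is an assumed callable that takes a prompt string and returns
    the model's text. It is injected so this sketch does not depend on any
    particular client library.
    """
    prompt = zero_shot_prompt(topic)
    return [complete(prompt).strip() for _ in range(n)]


def fake_complete(prompt: str) -> str:
    # Hypothetical stand-in for a real model call, so the sketch runs offline.
    return " <model output would appear here>\n"


headlines = sample_headlines(fake_complete, "data science")
for headline in headlines:
    print(headline)
```

Keeping the prompt in its own function makes it easy to change the topic while holding the instruction fixed, which is useful when comparing how much direction the model actually needs.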

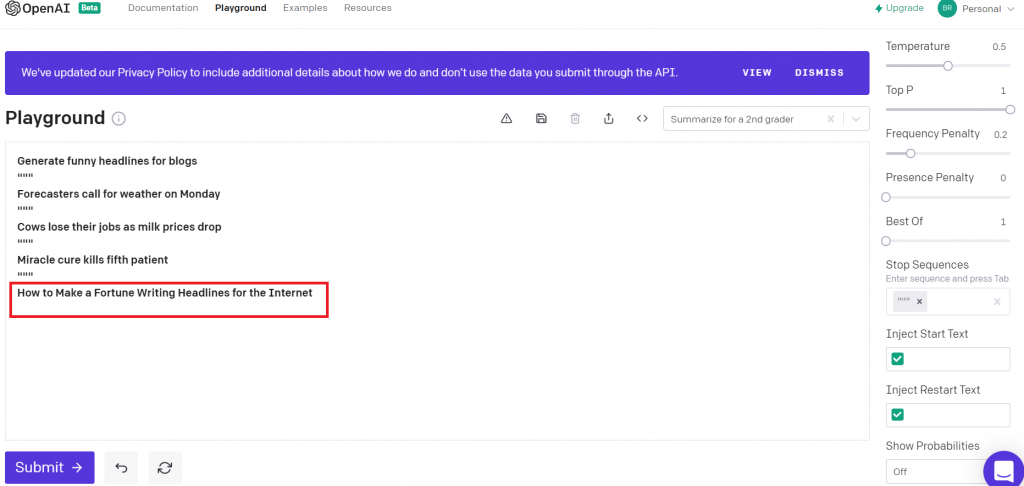

In our next, slightly more sophisticated test, we will attempt to show the bot what we mean by funny blog headlines by providing some examples. Here you will see that we set up the context on the top line and then provide just a few examples:
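As a rough sketch of that layout in code, the helper below puts the context on the top line, lists a few examples underneath, and leaves an open slot for the model to continue. The bullet formatting and the `few_shot_prompt` name are my own assumptions rather than the exact text used in the Playground, and the example headlines are deliberately left as placeholders.

```python
from typing import Sequence


def few_shot_prompt(instruction: str, examples: Sequence[str]) -> str:
    """Build a prompt: context on the top line, one example per line after it.

    The trailing "-" leaves an open slot so the model continues the pattern
    with a new headline. This layout is an assumption, not a required format.
    """
    lines = [instruction.strip()]
    lines.extend(f"- {example.strip()}" for example in examples)
    lines.append("-")
    return "\n".join(lines)


# Placeholder examples: substitute real headlines you find funny.
prompt = few_shot_prompt(
    "Generate funny headlines for blogs about data science:",
    ["<example headline 1>", "<example headline 2>", "<example headline 3>"],
)
print(prompt)
```

The examples do the heavy lifting here: the instruction stays the same as in the first test, and only the demonstrations change what the model treats as "funny".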

What’s great about the results here is that the generated headline clearly shows the model picking up on the common comedic devices used in our examples. But so what, right? We can demonstrate the creative potential of the model in these silly examples, but where is the potential for real impact? Well, the model can leverage constructs like logic, comedy, and other language rules when given a clear prompt, plus a few examples where a prompt alone is not enough, so we may be able to support more sophisticated uses going forward. Some of the more innovative examples include building code for apps just from language used to describe the content of the app…mind blown…